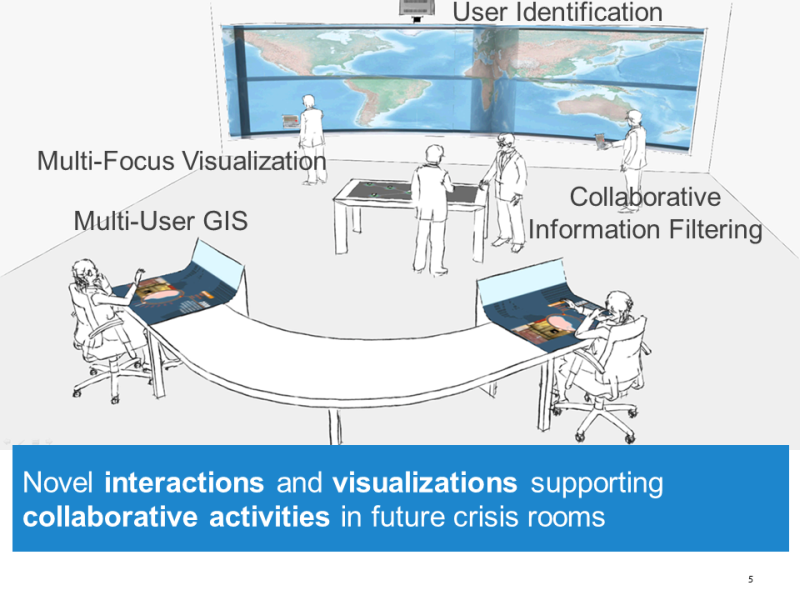

Interactive tables and large displays are great for exploring and interacting with urban data, for example in urban observatories or exhibitions. They turn working with maps, visualizations, or other urban information into a fun and social experience. However, such tables and displays are also expensive, far too expensive for schools, public libraries, community centres, hobbyists, or bottom-up initiatives, whose budgets are typically small.

We therefore asked ourselves how we could take the countless tablets and smartphones that typically sit idle in our pockets and bags and compose them into a low-cost but powerful multi-user, multi-device system. How can we enable users to temporarily share their personal devices to create a joint cross-device system for social and fun data exploration?

Video of HuddleLamp demo applications

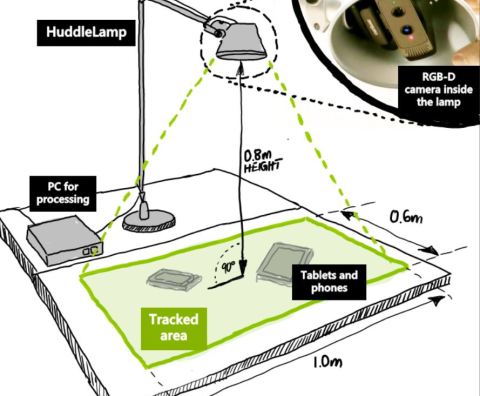

Our result is HuddleLamp, a desk lamp with an integrated low-cost depth camera (e.g. the Creative Senz3D by Intel). It enables users to compose interactive tables (or other multi-device user interfaces) from their tablets and smartphones just by putting them under this desk lamp.

Technical setup of HuddleLamp with an integrated RGB-D camera (tracking region: 1.0 × 0.6 m).

HuddleLamp uses our free and open source computer vision software to continuously track the presence and positions of devices on a table with sub-centimetre precision. At any time, users can add, remove, or rearrange devices without installing any apps or attaching markers. Additionally, the users’ hands are tracked to detect interactions above and between devices.

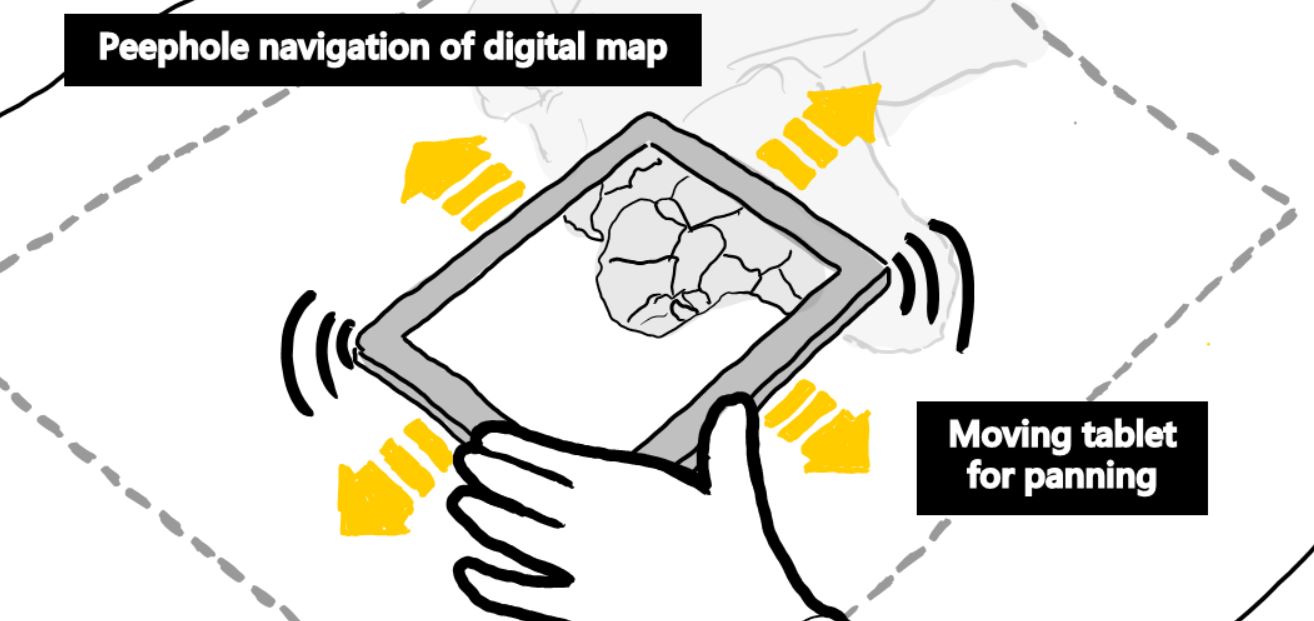

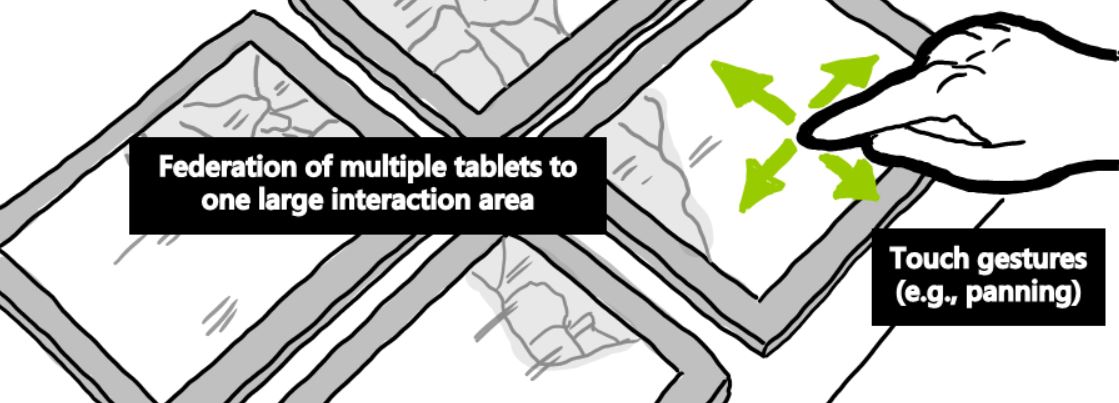

All this information is provided to our free and open source Web API, which enables developers to write cross-device Web applications. These applications can use the tracking data to become “spatially aware”, meaning that they can react to how the devices are arranged or moved in space. For example, physically moving a tablet on a desk can pan and rotate the content of its screen, so that each device appears to be a kind of peephole through which users view a spatially situated virtual workspace. When multiple tablets or phones are placed side by side, these peepholes merge into one huddle or federation of devices, and users can interact with them as if they were one large display.
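To make the peephole idea more concrete, here is a minimal TypeScript sketch of the underlying geometry. It is not the HuddleLamp Web API: the `DevicePose` type, the function names, and the `pixelsPerMetre` parameter are all hypothetical, and we assume a tracker reports each device's centre in metres and its clockwise rotation in degrees, in a table coordinate system whose y axis points downwards like a screen's.

```typescript
// Hypothetical device pose as a tracker might report it (not the HuddleLamp API).
interface DevicePose {
  x: number;        // device centre on the table, in metres
  y: number;        // y axis points "down", as on a screen
  angleDeg: number; // clockwise rotation of the device on the table, in degrees
}

interface Point {
  x: number;
  y: number;
}

// Map a point of the virtual workspace (table coordinates, metres) to
// device-screen coordinates in pixels, measured from the screen centre.
function workspaceToScreen(p: Point, pose: DevicePose, pixelsPerMetre: number): Point {
  const dx = p.x - pose.x;
  const dy = p.y - pose.y;
  // Undo the device's rotation to get from table space into device space.
  const a = (-pose.angleDeg * Math.PI) / 180;
  const cos = Math.cos(a);
  const sin = Math.sin(a);
  return {
    x: (dx * cos - dy * sin) * pixelsPerMetre,
    y: (dx * sin + dy * cos) * pixelsPerMetre,
  };
}

// The same mapping as a CSS transform for a workspace element. Assumes the
// element uses `transform-origin: 0 0` and is laid out at `pixelsPerMetre`.
function peepholeCssTransform(
  pose: DevicePose,
  pixelsPerMetre: number,
  screenWidthPx: number,
  screenHeightPx: number
): string {
  const tx = -pose.x * pixelsPerMetre;
  const ty = -pose.y * pixelsPerMetre;
  return (
    `translate(${screenWidthPx / 2}px, ${screenHeightPx / 2}px) ` +
    `rotate(${-pose.angleDeg}deg) ` +
    `translate(${tx}px, ${ty}px)`
  );
}
```

Under these assumptions, each device applies the transform for its own pose whenever the tracker reports a change. Because every device renders the same workspace from its own position, devices placed side by side show adjacent parts of it and behave like one tiled display without any extra logic.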

Peephole navigation and using multiple tablets as one tiled display.

HuddleLamp was created by Hans-Christian Jetter of the Intel ICRI Cities at UCL in London and Roman Rädle of the Human-Computer Interaction Group of the University of Konstanz together with colleagues from the UCL Interaction Centre.

Thanks to the great work of our student research interns Oscar Robinson (UCL), Jonny Manfield (UCL), and Francesco De Gioia (University of Pisa), who visited the ICRI in summer 2014, we are happy not only to present HuddleLamp in a talk at the ACM ITS Conference 2014 but also to give a live demonstration there.

HuddleLamp is a first step towards a “sharing economy” for excess display and interaction resources in a city. We envision that in future cities users will be able to seamlessly add their devices to, or remove them from, shared multi-device systems in an ad-hoc fashion without explicit setup or pairing. Instead, this will happen implicitly as a by-product of natural use in space, for example by bringing multiple devices into the same room, placing them side by side on a table or desk, and moving them around as needed. Ideally, users will experience these co-located cooperating devices and reconfigurable displays as one seamless and natural user interface for ad-hoc collaboration.

Having created our free and open source base technology, we are now creating and studying examples for the visual exploration of urban data. Our goal is to enable citizens to create their own bottom-up urban observatories for community engagement and activism in spaces such as schools, public libraries, community centres, or museums.

Further reading:

To learn how to build your own HuddleLamp and HuddleLamp applications, please visit: http://www.huddlelamp.org or join the HuddleLamp Facebook group.

The ITS paper on HuddleLamp is also available here.